The Visual Computing Group (May 2012)

From left: top row: Roberto, Alex, Marcos, José; second row from top: Antonio, Ruggero; middle row: Fabio M., Gianni, Enrico, Fabio B.; bottom row: Marco, Katia, Cinzia, Emilio. Photo taken outside our lab in Pula in May 2012.

Digital Mont'e Prama - Museum Installation (Cagliari, May 2014)

We digitally acquired and reproduced 37 Nuragic statues at 1/4 mm resolution. Interactive exploration systems have been installed in Cagliari, Cabras, Rome, and Milan.

Digital Mont'e Prama - Museum Installation (Cabras, May 2014)

We digitally acquired and reproduced 37 Nuragic statues at 1/4 mm resolution. Interactive exploration systems have been installed in Cagliari, Cabras, Rome, and Milan.

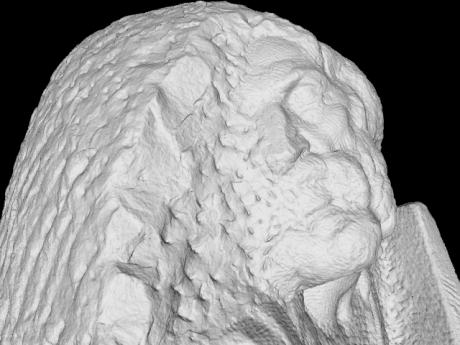

Digital Mont'e Prama - Shape and color reconstruction

We developed novel methods for digitizing the color and shape of complex objects under clutter and occlusion, and applied them to a large collection of statues. Best paper award at Digital Heritage 2013.

Interactive manipulation of the Digital Michelangelo David on a light field display

Real-time rendering of a 1Gtriangle (0.25 mm resolution) model on a 35Mpixel display

Interactive exploration of S. Saturnino point cloud dataset

Model acquired using a time-of-flight laser scanner, then reconstructed and rendered with the CRS4 pipeline

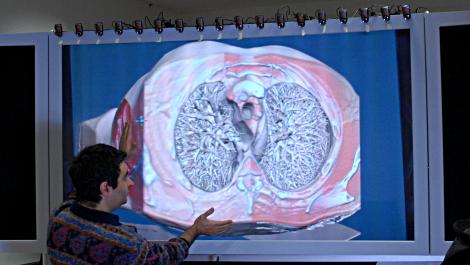

Interactive exploration of human CT scan on a light field display

Real-time out-of-core CUDA volume renderer running on a 35Mpixel display

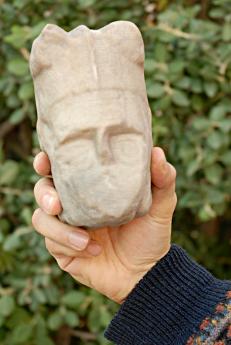

Physical 3D reproduction of a Mont'e Prama statue

Model acquired with a 3D laser scanner and digital photography, and reproduced with a ZCorp 3D printer

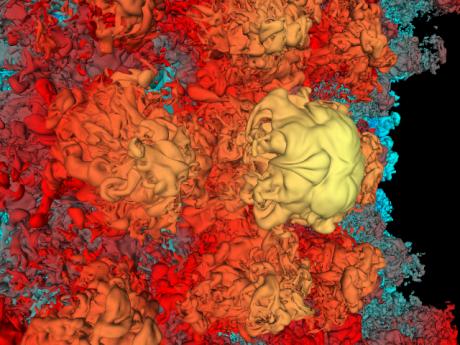

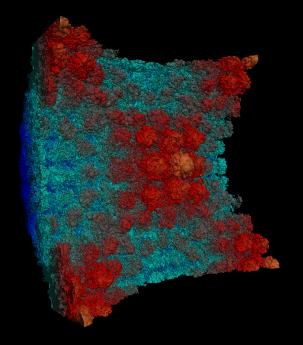

FarVoxels: exploration of a complex isosurface on a large-screen display

Real-time inspection of a Richtmyer-Meshkov isosurface (472M triangles) rendered using the CRS4 Far Voxels technique (SIGGRAPH 2005)

Illustrative CUDA volume rendering with view-dependent probes

Real-time inspection of a CT dataset of a biological specimen using an illustrative technique that preserves context information (The Visual Computer, 2010)

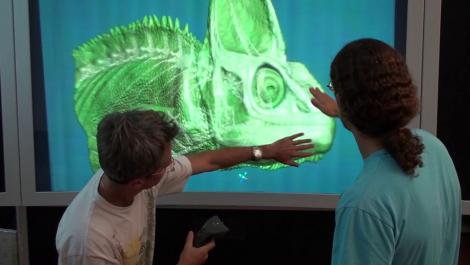

Multi-user exploration of a 64Gvoxel dataset on a 35Mpixel light field display

Users freely mix and match 3D tools creating view-dependent illustrative visualizations (The Visual Computer, 2010)

Interactive exploration of a 64Gvoxel CT dataset on a 35Mpixel light field display

Objects appear floating in the display workspace, providing correct parallax cues while delivering direction-dependent information to multiple naked-eye viewers (The Visual Computer, 2010)

Detailed view of a multi-gigavoxel volumetric model using MImDA compositing

Bone is given a higher importance and thus shines through previously accumulated material layers (The Visual Computer, 2010)

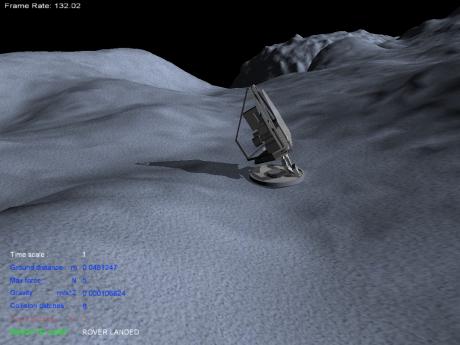

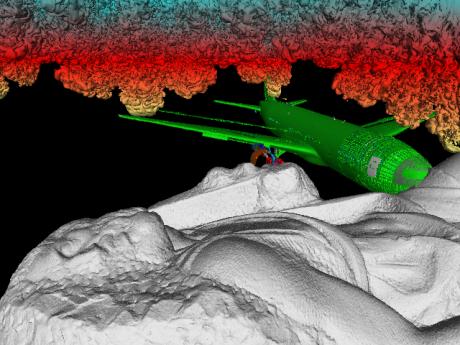

Frogbot ready to jump across the surface of asteroid Itokawa

Screenshot taken during a simulation using the 5 cm resolution dataset of asteroid 25143 Itokawa (about 220M triangles). Collaboration with Johns Hopkins Applied Physics Labs.

Frogbot landing after a jump across the surface of asteroid Itokawa

Image taken during a live simulation. The robot can store energy in a geared six-bar spring/linkage system and jump like a frog by releasing its legs. Collaboration with Johns Hopkins Applied Physics Labs.

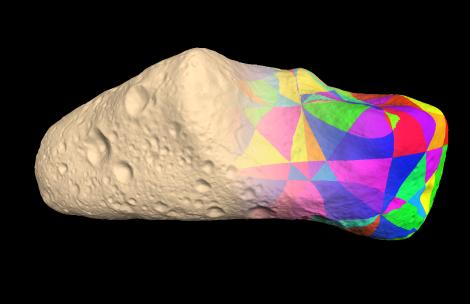

View-dependent rendering of a 1.5 Gtriangle model of asteroid 433 Eros

The blended right side of the picture shows the adaptive mesh structure with a different color for each patch. Each patch corresponds to about 16K triangles. Collaboration with Johns Hopkins Applied Physics Labs.

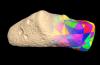

Animation of the NEAR probe approaching asteroid 433 Eros

Rendering the surface across such a wide variety of possible motions requires a rapidly adaptive multiresolution structure, such as the one provided by the multiresolution database. Collaboration with Johns Hopkins Applied Physics Labs.

Interactive remote exploration of massive cityscapes

Real-time rendering of procedurally reconstructed ancient Rome using the BlockMap technique with ambient occlusion (VAST 2009)

Collaborative diagnostic session on a 7Mpixel light field display

Direct volume rendering on the light field display provides rapid volumetric understanding even using depth-oblivious techniques (The Visual Computer, 2009)

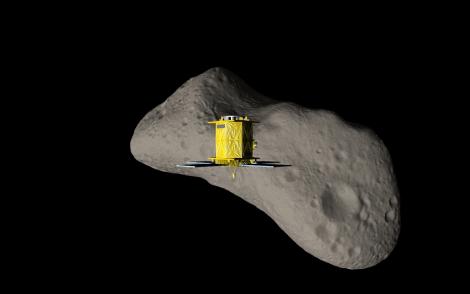

Surface reconstruction of Pisa Cathedral using streaming MLS

This high-quality 140M-triangle reconstruction was completed in under 45 minutes on a quad-core machine using 870MB per thread (Graphics Interface, 2009)

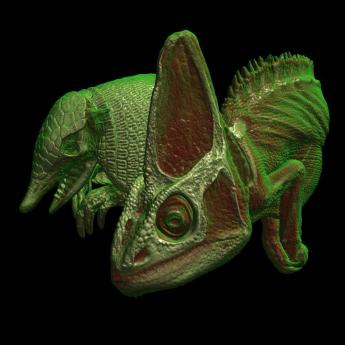

Streaming MLS reconstruction of Michelangelo's Awakening Slave

This high-quality 220M-triangle reconstruction was completed in under 85 minutes on a quad-core machine using 588MB per thread (Graphics Interface, 2009)

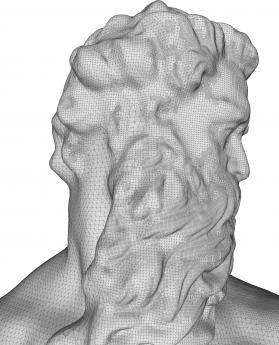

Surface reconstruction of Neptune using streaming MLS

Triangles of good aspect ratio are regularly distributed and at a density that matches the density of the input samples (Graphics Interface, 2009)

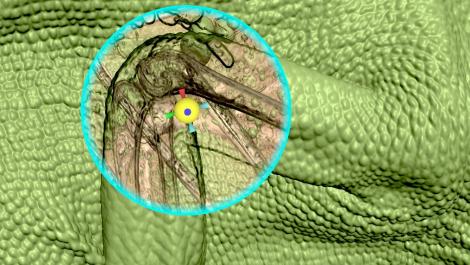

Context preserving focal probes for exploration of volumetric datasets

Our focal probes define a region of interest (ROI) using a distance function that controls the opacity of the voxels within the probe, exploit silhouette enhancement, and use non-photorealistic shading techniques to improve shape depiction (3DPH 2009)
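To illustrate how a distance function can control voxel opacity inside a probe, here is a minimal sketch. It assumes a spherical probe whose core makes occluding context material transparent and a smoothstep falloff back to full opacity. The probe shape, the falloff, and the names `probe_opacity_scale` and `modulate_opacity` are hypothetical and are not taken from the 3DPH 2009 paper.

```python
import math


def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Hermite interpolation from 0 to 1 as x goes from edge0 to edge1."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def probe_opacity_scale(voxel, center, radius, falloff):
    """Hypothetical opacity scale for a voxel near a spherical probe.

    Returns 0 inside the probe core (context is cleared), rising smoothly
    to 1 across a shell of width `falloff` outside the core.
    """
    d = math.dist(voxel, center)  # Euclidean distance to the probe center
    return smoothstep(radius, radius + falloff, d)


def modulate_opacity(alpha, voxel, center, radius, falloff, min_scale=0.0):
    """Scale a classified voxel opacity; min_scale keeps a faint trace of context."""
    scale = probe_opacity_scale(voxel, center, radius, falloff)
    return alpha * (min_scale + (1.0 - min_scale) * scale)
```

The smoothstep transition avoids a hard visible seam at the probe boundary. A linear ramp or a different distance metric (for example, distance to a box or cone) would fit the same scheme.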

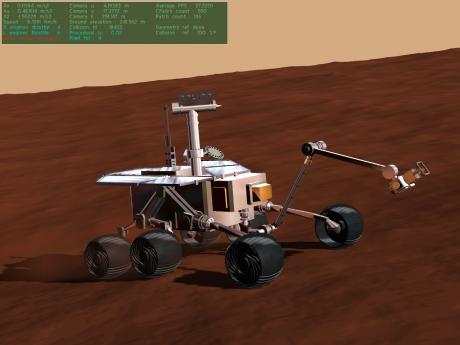

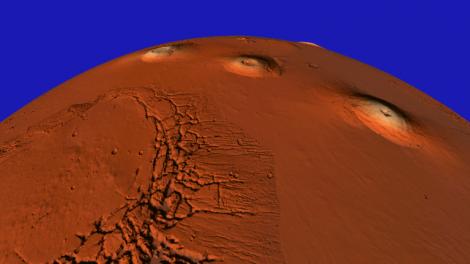

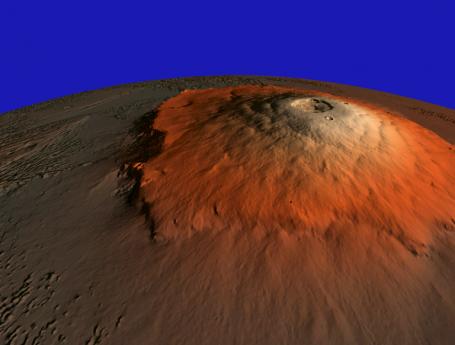

An Athena-class rover explores a multiresolution model of Mars

The Batched Dynamic Adaptive Meshes method has been integrated into a space robotics simulation software environment used for planetary surface robotics development. Collaboration with Johns Hopkins Applied Physics Labs.

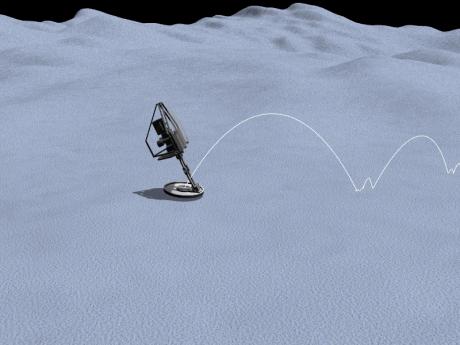

An Athena-class rover explores the 5 cm resolution Moon dataset

The Batched Dynamic Adaptive Meshes method has been integrated into a space robotics simulation software environment used for planetary surface robotics development. Collaboration with Johns Hopkins Applied Physics Labs.

Point-based local and remote exploration of dense 3D scanned models

Interactive exploration of Sant'Antioco point cloud dataset on a touch screen using a modified unicontrol interface (VAST 2009)

Real-time interactive inspection of a massive scalar volume

Real-time out-of-core volume rendering of 2Gvoxel datasets using single pass GPU raycasting (The Visual Computer, 2008)
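Single-pass GPU raycasting typically accumulates samples front to back along each ray and stops once the ray is nearly opaque. The CPU sketch below shows that compositing loop for a single ray. The sample format (non-premultiplied RGBA, already classified) and the 0.99 termination threshold are assumptions, and the out-of-core data management the actual renderer relies on is not modeled.

```python
from typing import Iterable, Tuple

RGBA = Tuple[float, float, float, float]


def composite_front_to_back(samples: Iterable[RGBA], opacity_cutoff: float = 0.99) -> RGBA:
    """Composite classified samples (non-premultiplied RGBA) ordered front to back."""
    r = g = b = a = 0.0
    for sr, sg, sb, sa in samples:
        w = (1.0 - a) * sa  # share of this sample not hidden by closer samples
        r += w * sr
        g += w * sg
        b += w * sb
        a += w
        if a >= opacity_cutoff:  # early ray termination: further samples are invisible
            break
    return (r, g, b, a)
```

Because accumulated opacity only grows along the ray, stopping early changes the result by at most `1 - opacity_cutoff`. This is what makes the technique worthwhile for dense datasets.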

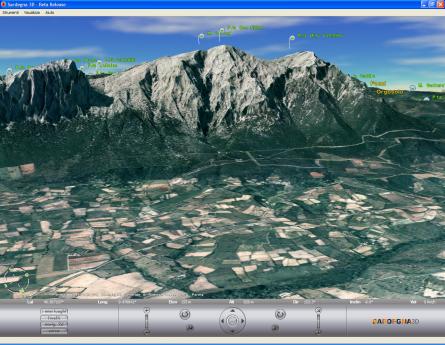

High-quality networked terrain rendering from compressed bitstreams

Interactive session with our Internet geo-viewing tool streaming wavelet-compressed terrains over a 4Mbps ADSL network (WEB3D 2007)

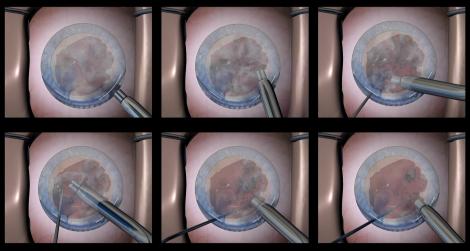

Real-time cataract surgery simulation for training

Real-time simulation of phacoemulsification using a meshless shape-based dynamic algorithm integrated with a simplex geometry representation and a smoothed particle hydrodynamics scheme (VRIPHYS 2006)
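Smoothed particle hydrodynamics estimates field quantities as kernel-weighted sums over neighboring particles. The sketch below computes per-particle densities with the poly6 kernel, a common choice in interactive SPH. The kernel choice, the brute-force neighbor loop, and the function names are assumptions, not details of the VRIPHYS 2006 simulator.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def poly6(r: float, h: float) -> float:
    """3D poly6 smoothing kernel with support radius h (zero for r > h)."""
    if r < 0.0 or r > h:
        return 0.0
    return 315.0 / (64.0 * math.pi * h ** 9) * (h * h - r * r) ** 3


def densities(positions: Sequence[Vec3], masses: Sequence[float], h: float) -> List[float]:
    """Density at each particle: rho_i = sum_j m_j * W(|x_i - x_j|, h).

    Uses an O(n^2) loop for clarity; a real-time simulator would find
    neighbors with a uniform grid or spatial hash instead.
    """
    return [
        sum(m_j * poly6(math.dist(x_i, x_j), h) for x_j, m_j in zip(positions, masses))
        for x_i in positions
    ]
```

Each particle always includes its own contribution (the j = i term). An isolated particle therefore still has a nonzero density.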

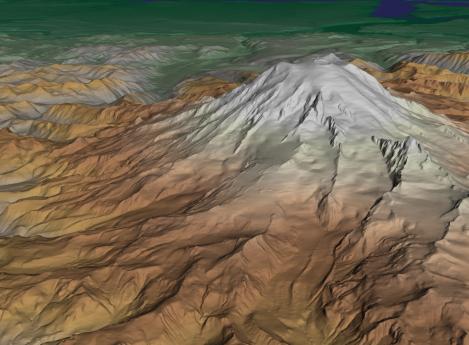

C-BDAM: compressed batched dynamic adaptive meshes for terrain rendering

Interactive exploration of a compressed multiresolution terrain representation. The approach provides overall geometric continuity and compression with support for maximum-error metrics (Eurographics 2006)
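One common way to guarantee a maximum (L-infinity) error bound when compressing residuals is uniform quantization with step 2ε. This keeps every reconstructed value within ε of the original. The sketch below shows only that quantization step under this assumption. It is not the C-BDAM coding pipeline, and the function names are hypothetical.

```python
import math
from typing import List


def quantize(residuals: List[float], eps: float) -> List[int]:
    """Map each residual to the nearest multiple of 2*eps (mid-tread quantizer)."""
    step = 2.0 * eps
    return [math.floor(r / step + 0.5) for r in residuals]


def dequantize(codes: List[int], eps: float) -> List[float]:
    """Reconstruct residuals; each lies within eps of its original value."""
    step = 2.0 * eps
    return [q * step for q in codes]
```

When such residuals refine a coarser level, predicting from the already reconstructed (quantized) values, rather than from the originals, keeps the per-sample bound from accumulating across levels.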

FarVoxels: interactive inspection of the St. Matthew 0.25mm dataset

Real-time inspection of the St. Matthew 0.25mm dataset (373M triangles) using our Far Voxels technique. The image is approximated using 1.5M voxels and 345K triangles. Data is presented at 1px resolution (SIGGRAPH 2005)

FarVoxels: interactive inspection of complex isosurface

Real-time inspection of the 472M triangle isosurface of the mixing interface of two gases from the Gordon Bell Prize winning simulation of a Richtmyer-Meshkov instability (SIGGRAPH 2005)

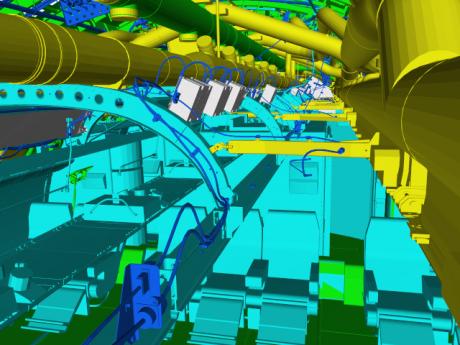

FarVoxels: interactive inspection of the Boeing 777 dataset

Real-time inspection of the full Boeing 777 CAD model (350M triangles). The image is approximated using 1M voxels and 3.4M triangles. Data is presented at 1px resolution (SIGGRAPH 2005)

FarVoxels: interactive inspection of a complex heterogeneous scene

Real-time inspection of a 1.2G-triangle complex scene containing the St. Matthew 0.25mm resolution dataset, the LLNL Richtmyer-Meshkov simulation isosurface, and the full Boeing 777 CAD model (SIGGRAPH 2005)

Layered Point Clouds: Richtmyer-Meshkov isosurface rendering

Point-based rendering of the isosurface of the mixing interface of two gases from the Gordon Bell Prize winning simulation of a Richtmyer-Meshkov instability. The model consists of over 234M sample points (C&G 2004)

Adaptive Tetrapuzzles: David 1mm rendering

Model rendered at ±1 pixel screen tolerance with 841 patches and 1172K triangles at 50 fps in an SXGA window with 4x Gaussian multisampling, one positional light, and a glossy material (SIGGRAPH 2004)
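A pixel tolerance like the one above is usually enforced by projecting each patch's object-space error bound to screen space and refining wherever the projection exceeds the tolerance. The sketch below uses a standard perspective estimate. The function names, the symmetric vertical field of view, and measuring distance to the nearest point of a bounding sphere are assumptions, not details taken from the Adaptive Tetrapuzzles paper.

```python
import math


def projected_error_px(object_error, distance, viewport_height_px, fovy_rad):
    """Approximate on-screen size, in pixels, of an object-space error at a given distance."""
    return object_error * viewport_height_px / (2.0 * distance * math.tan(0.5 * fovy_rad))


def needs_refinement(object_error, eye, center, radius, viewport_height_px, fovy_rad,
                     tolerance_px=1.0, near=1e-3):
    """Refine a patch if its error, measured at the closest point of its
    bounding sphere (clamped to `near`), projects to more than tolerance_px."""
    d = max(math.dist(eye, center) - radius, near)
    return projected_error_px(object_error, d, viewport_height_px, fovy_rad) > tolerance_px
```

For an SXGA window (1280x1024) the viewport height is 1024 pixels. With a 90-degree vertical field of view, an error of 0.01 units then projects to about one pixel at a distance of 5.12 units.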

crs4.it